The Fundamental Rights Impact Assessment (FRIA) is one of the key pillars of the European Union’s Artificial Intelligence Regulation (AI Act). Its purpose is to ensure that high-risk AI systems do not compromise individuals’ fundamental rights, such as privacy, equality, freedom of expression, or non-discrimination. Although it may seem like a bureaucratic procedure, the FRIA represents an opportunity to strengthen ethical governance and the credibility of any automated system.

Definition and purpose of the FRIA

The FRIA is a structured assessment process that organizations must conduct before implementing a high-risk artificial intelligence system. Its main objective is to identify, document, and mitigate potential negative effects that such technology may have on individuals’ fundamental rights.

Through this assessment, organizations can:

- Anticipate risks of discrimination or bias in automated decisions.

- Improve system transparency.

- Strengthen the trust of users, clients, and authorities.

Legal framework supporting it

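The AI Act requires the FRIA for certain deployers of high-risk AI systems, which include systems used in areas such as employment, education, access to essential services, and the administration of justice. The obligation is laid out in Article 27 of the Regulation. It applies to deployers (the Act’s term for organizations that use an AI system) that are bodies governed by public law or private entities providing public services, and to deployers of systems used for credit scoring or for risk assessment and pricing in life and health insurance. These deployers must carry out the assessment before first putting the system into use.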

The FRIA also aligns with other regulations such as the General Data Protection Regulation (GDPR), which already provides for data protection impact assessments (DPIAs). The FRIA complements the DPIA by expanding the analysis to all fundamental rights, not just data protection.

Risks it aims to mitigate

The use of artificial intelligence, if not properly supervised, can lead to significant consequences for people’s rights. Some risks the FRIA seeks to anticipate and minimize include:

- Algorithmic discrimination: automated decisions disproportionately affecting certain social or ethnic groups.

- Lack of transparency: AI systems that do not allow understanding of decision-making processes.

- Privacy violations: collection or use of personal data without adequate safeguards.

- Inequality of access: limitations or biases in the provision of public or private services.

A well-executed FRIA acts as a preventive shield against these risks and reinforces the legitimacy of any AI-based initiative.
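As an illustration of how the algorithmic discrimination risk above might be quantified during an assessment, the following is a minimal sketch that compares selection rates across groups. The function names, the group labels, and the sample data are hypothetical, and the AI Act does not prescribe this metric or any threshold for it; it is one simple indicator among many that a team could choose to document.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity
    return min(rates.values()) / highest


# Hypothetical outcomes of an automated screening step.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(sample)
ratio = disparity_ratio(rates)
print(rates)
print(f"disparity ratio: {ratio:.2f}")
```

A low ratio does not prove discrimination by itself, but it flags a difference in outcomes that the assessment should explain or mitigate.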

Who is responsible for conducting a FRIA?

The responsibility for carrying out a Fundamental Rights Impact Assessment falls mainly on the organizations that deploy high-risk artificial intelligence systems. However, fulfilling this obligation requires collaboration from various internal and external actors. Clearly identifying the roles involved is key to an effective implementation that complies with the AI Act.

Responsibility of AI system deployers

Organizations that deploy high-risk AI systems are directly responsible for carrying out the FRIA and for completing it before the system goes live. Providers (the developers of the system) are not required to conduct the assessment, but they support it by supplying key technical information about the system, its design, and their own risk evaluations. Their collaboration is essential for the deployer to build a comprehensive FRIA that complies with the Regulation.

Successfully conducting a FRIA requires cross-functional collaboration within the organization. Legal and compliance teams ensure the evaluation meets regulatory standards, technical teams identify system risks, and operational departments contextualize the system’s use. This integrated approach helps produce a meaningful and effective assessment aligned with the AI Act.

When should a FRIA be conducted?

A Fundamental Rights Impact Assessment (FRIA) must be carried out before a high-risk AI system covered by the obligation is first put into use. This preventive requirement is key to avoiding negative consequences once the system is operational. Reacting to problems after they appear is not enough: the Regulation requires organizations to anticipate risks and manage AI ethically and proactively.

The ideal time to conduct a FRIA is during the system’s design and implementation phase, in parallel with its technical development and before any interaction with real users or production data processing. It should also be updated whenever the system is significantly modified, applied in new contexts, or when new risks are identified.

Content of the FRIA questionnaire

The FRIA questionnaire does not follow a single mandatory format, but it should comprehensively address the following elements:

- System description: its purpose, functioning, data handled, and use context.

- Risk evaluation: detailed analysis of how the system might impact individuals’ fundamental rights.

- Mitigation measures: specific strategies to avoid, minimize, or manage identified risks.

- Human oversight: mechanisms ensuring human control over automated decisions.

- Traceability and transparency: how system decisions are documented and justified.

This questionnaire should be developed collaboratively with technical and legal teams and preserved as documentation for future audits or regulatory reviews.
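The elements listed above can be captured in a simple structured record so that gaps are visible before the assessment is filed. The sketch below is one possible layout, not an official template: the class names, field names, and the example content are all assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Risk:
    right_affected: str   # e.g. "non-discrimination"
    description: str      # how the system might affect that right
    mitigation: str = ""  # measure adopted to avoid or reduce the risk


@dataclass
class FriaRecord:
    system_description: str   # purpose, functioning, data, use context
    risks: List[Risk] = field(default_factory=list)
    human_oversight: str = ""  # who can review or override decisions
    traceability: str = ""     # how decisions are logged and justified

    def open_items(self):
        """List sections that are still empty and risks without mitigation."""
        gaps = [
            name
            for name in ("system_description", "human_oversight", "traceability")
            if not getattr(self, name).strip()
        ]
        if not self.risks:
            gaps.append("risks")
        gaps += [
            f"mitigation for: {risk.description}"
            for risk in self.risks
            if not risk.mitigation.strip()
        ]
        return gaps

    def to_json(self):
        """Serialize the record so it can be stored for audits."""
        return json.dumps(asdict(self), indent=2)


# Hypothetical, partially completed assessment.
record = FriaRecord(
    system_description="CV screening tool used to shortlist applicants",
    risks=[Risk("non-discrimination", "lower scores for career gaps")],
    human_oversight="A recruiter reviews every automated rejection",
)
print(record.open_items())
```

Keeping the record in a serializable form makes it straightforward to version it alongside the system and to hand it over during an audit or regulatory review.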

Where can I find templates and resources for conducting a FRIA?

Although there are no official standardized forms issued by the European Union yet, there are useful resources developed by specialized entities:

- BDO Legal’s FRIA Guide: offers a practical introduction with guiding steps.

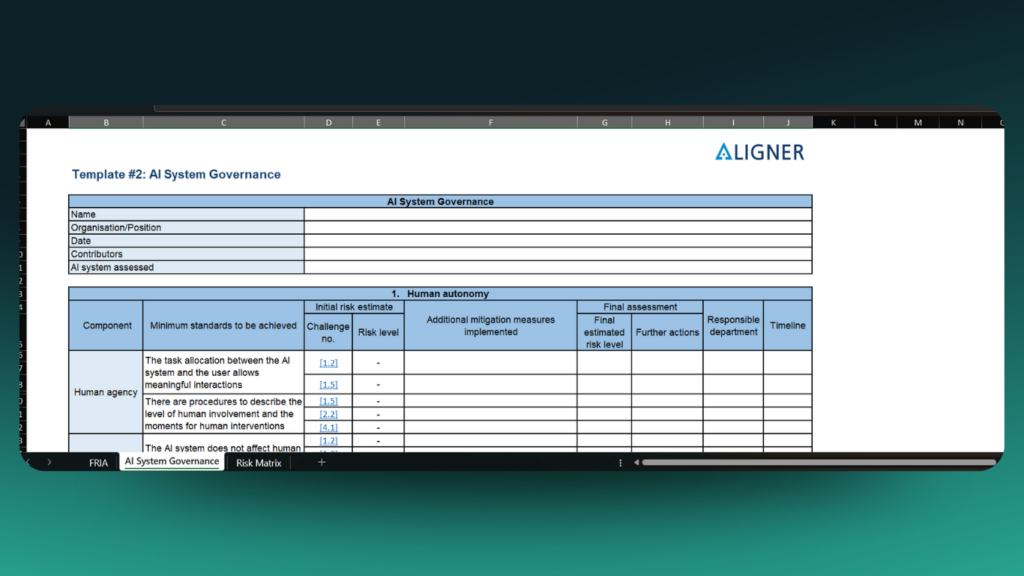

- ALIGNER Project Assessment Template: includes suggested structures for documenting the impact on fundamental rights.

- EU AI Office: the AI Act tasks the AI Office with developing an official questionnaire template. Once published, it should be accessible through the AI Act Explorer.

Having these tools greatly facilitates compliance and the correct structuring of the assessment.

ALIGNER project evaluation Excel template, available on the project’s website.

Differences between FRIA and DPIA

Although FRIA and DPIA share a preventive approach, their purpose, scope, and legal basis differ significantly. Understanding these differences is essential for organizations to apply each correctly according to context.

Purpose and scope of each assessment

The DPIA (Data Protection Impact Assessment), regulated by the GDPR, focuses on risks related to privacy and personal data processing. FRIA, while also concerned with privacy, goes further: it analyzes all possible impacts of AI systems on fundamental rights, including equality, non-discrimination, and freedom of expression.

In summary:

- DPIA focuses on personal data protection.

- FRIA covers a broader range of fundamental rights.

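In many cases, especially when high-risk AI systems handle personal data, both assessments are required. The DPIA ensures GDPR compliance, while the FRIA checks that the system’s use does not infringe other fundamental rights. Where a DPIA already covers part of the analysis, the AI Act allows the FRIA to complement it rather than repeat it.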

For example, an AI system used to evaluate job candidates must comply with GDPR (via DPIA) and also prove it doesn’t discriminate or violate privacy or equal opportunity (via FRIA).

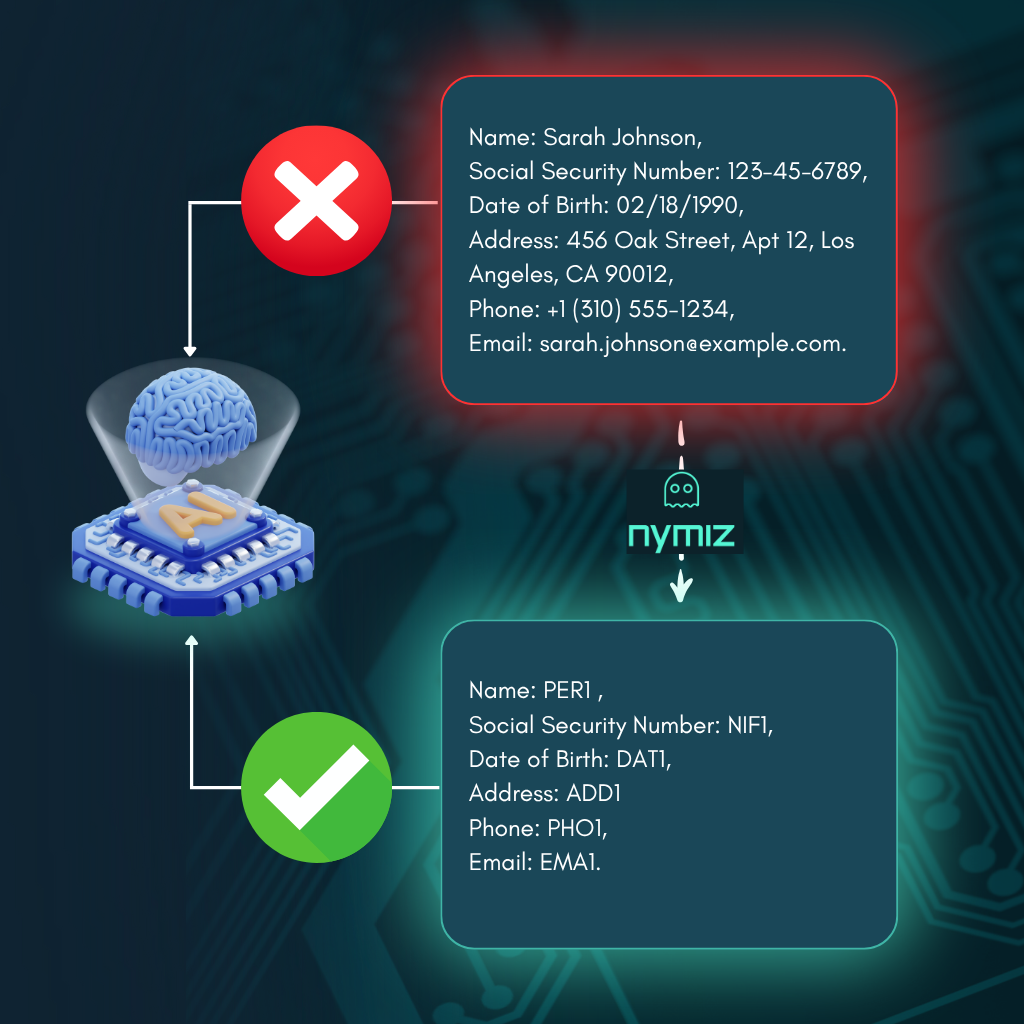

Data processing for AI systems: How Nymiz facilitates FRIA compliance

One of the key aspects of FRIA compliance is the ethical and secure processing of data used to train, test, or implement AI systems. Article 59 of the AI Act, which covers the further processing of personal data in AI regulatory sandboxes, makes that processing conditional on safeguards for individuals’ rights and freedoms. More broadly, data processing in these contexts should respect fundamental rights, including privacy, dignity, and non-discrimination.

This presents a significant challenge for organizations, especially when working with unstructured documents, databases, or large volumes of sensitive information. That’s where Nymiz comes in.

Nymiz as a key tool for privacy and ethical governance

At Nymiz, we create intelligent anonymization solutions that not only protect privacy but also help organizations integrate AI responsibly, with privacy by design and regulatory compliance. Our approach combines automation, accuracy, and adaptability to the context of use.

- Data anonymization: We convert identifiable data into irreversible formats, eliminating any reidentification risk.

- Tokenization and synthetic data: We replace sensitive data with equivalent values that preserve analytical structure without exposing privacy.

- Multi-format management: We protect data in unstructured documents, free text, or databases.

This comprehensive capacity supports robust FRIA execution by justifying technical measures adopted to reduce risks and respect privacy and proportionality principles.
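To make the idea of tokenization concrete, here is a generic, minimal sketch that replaces detected values with stable placeholders. It is not Nymiz’s implementation: the patterns, function name, and sample text are hypothetical, the two regular expressions only catch simple email addresses and phone numbers, and because the mapping is kept, the result is pseudonymized rather than irreversibly anonymized.

```python
import re

# Hypothetical, deliberately simple patterns. Real documents need far
# broader detection (names, addresses, identifiers, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def tokenize(text):
    """Replace each detected value with a placeholder such as [EMAIL_1].

    Returns the redacted text and the value-to-token mapping. The same
    value always receives the same token, which preserves references
    within the document.
    """
    mapping = {}   # original value -> token
    counters = {}  # label -> number of distinct values seen so far

    for label, pattern in PATTERNS.items():

        def replace(match, label=label):
            value = match.group(0)
            if value not in mapping:
                counters[label] = counters.get(label, 0) + 1
                mapping[value] = f"[{label}_{counters[label]}]"
            return mapping[value]

        text = pattern.sub(replace, text)
    return text, mapping


redacted, mapping = tokenize(
    "Contact ana@example.com or +34 600 123 456. Reply to ana@example.com."
)
print(redacted)
```

Whoever holds the mapping can reverse the substitution, so it has to be protected or discarded depending on whether re-identification must remain possible.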

Application in the legal sector: A practical use case

A law firm that wants to use generative AI to analyze case files and draft legal documents faces elevated risk if those files contain personal data. Without prior anonymization, this use could undermine the privacy safeguards a FRIA is meant to verify and compromise client confidentiality.

By integrating Nymiz into this workflow, the firm can:

- Automatically anonymize documents.

- Preserve context and analytical value.

- Generate documented evidence of compliance.

This enables safe AI implementation that respects privacy, mitigates risks, and speeds up regulatory compliance.

Turn FRIA into a strategic solution

FRIA compliance should not be seen as a regulatory burden, but as an opportunity to strengthen organizational culture around ethics and rights protection. In this context, Nymiz positions itself as a key tool for completing a FRIA successfully, enabling anonymization and auditing of large volumes of sensitive data while maintaining analytical usefulness. With Nymiz, organizations not only comply with the AI Act but also accelerate their digital transformation ethically and securely.

Want to see how Nymiz can help you comply with FRIA and protect your data assets? Book a personalized demo now and discover how to turn privacy into your strongest competitive advantage.