Artificial intelligence is rapidly transforming industries by streamlining decision‑making and automating complex processes. However, growing reliance on AI systems has spurred serious concerns around privacy, bias, accountability, and ethical governance. Today, the pressing question for organizations is no longer simply whether to use AI, but how to ensure that human oversight remains central throughout its development and deployment.

A recent European Commission report revealed that only 42% of companies using AI actively supervise the decisions their systems make, and 67% admit they don’t fully understand how those systems arrive at their conclusions. In the U.S., an IBM study found that 44% of executives acknowledge deploying AI without sufficient oversight mechanisms. These figures expose a systemic gap in the human oversight needed to protect against errors, data breaches, reputational damage, and regulatory non‑compliance. In this article, we explore the strategic importance of human oversight in AI and its role in data protection and governance, and we present tools such as anonymization that strengthen control.

The role of human oversight in AI governance

Human oversight means every action or decision made by an AI system must be subject to meaningful human review, interpretation, or intervention. This principle is embedded in the EU AI Act, which mandates human oversight measures for high‑risk AI applications.

Without oversight, AI systems can become black boxes: opaque, indecipherable, and potentially harmful. From discriminatory hiring algorithms to misclassified medical diagnoses, both accidental and intentional failures demonstrate the peril of unchecked automation.

One of the most controversial cases involved Grok, the AI chatbot developed by xAI and integrated into the X social platform. In July 2025, Grok generated violent and antisemitic content (self‑identifying as “MechaHitler”) due to a vulnerability in its prompt chain. The incident triggered regulatory investigations, criticism from organizations like the ADL, and calls for intervention from Poland. Grok may also have used personal data from European users without adequate controls, which prompted a formal investigation by Ireland’s Data Protection Commission.

Failures like this show that even major tech companies can lose control over model behavior, with dangerous outcomes. Deploying AI is not enough; it must be backed by continuous monitoring, proactive human reviews, and real‑time correction mechanisms. Human oversight is the anchor that ensures technological progress does not become a societal risk.

What happens without human oversight?

The absence of human oversight does not just compromise technical accuracy; it can also lead to financial losses, violations of fundamental rights, entrenched bias, and major ethical breakdowns. Key risks include:

- Automated bias and unfair decisions

Without human checks, biases in training data are amplified, leading to systematically discriminatory outcomes. A 2019 McKinsey study warned that unsupervised automated systems can reinforce structural inequalities.

- Critical errors go unnoticed

Without proper training, people struggle to catch mistakes in automated decision‑making. In professional tennis, for example, referees who reversed calls on the basis of Hawk‑Eye system recommendations sometimes increased their interpretative error rates.

- Catastrophic failures in critical sectors

In healthcare, automotive, and finance, AI without human validation has led to misdiagnoses, accidents, and disastrous financial decisions. Multiple studies warn that lack of oversight can amplify bias, violate rights, and even risk lives.

- Dilution of accountability

When an AI fails, who is responsible? Without a human validator, accountability becomes fragmented, making audits and redress difficult. Although the EU AI Act demands effective oversight, many systems still lack clear supervision protocols.

- Security risks with autonomous agents

AI systems operating without human supervision or identity controls can be easily exploited (data leaks, credential abuse, unauthorized actions), as highlighted by TechRadar.

One U.S. case showed a facial recognition system misidentifying Black individuals at five times the rate of white individuals (MIT–Stanford). The COMPAS algorithm used in U.S. courts similarly assigned higher recidivism scores to Black people without objective justification.

These weren’t simple miscalculations; they were direct outcomes of models deployed without ethical judgment, contextual review, or human oversight.

The importance of human oversight in data privacy

One of the most overlooked yet critical aspects of AI governance is how personal data is handled. The focus often rests on model accuracy or performance, sidelining how data is collected, processed, and stored. Privacy must be a foundational principle, integrated throughout the entire AI lifecycle, not an afterthought.

Human oversight becomes essential here. Supervising AI systems means not only reviewing outputs or correcting mistakes, but also ensuring that data used in training, fine‑tuning, and deployment complies with regulations like the GDPR, and often even stricter ethical standards. Active oversight helps detect hidden biases, improper use of sensitive information, or unintended impacts on individuals’ rights.

Human intervention also provides necessary context to automated decisions. For example, a credit‑scoring system may be lawful, but without human supervision of how historical data and evaluation criteria are applied, discriminatory patterns might persist. Privacy, as control over personal information, is only fully protected when human judgment proactively assesses and mitigates risk.

Thus, human oversight isn’t an impediment to AI efficiency; it’s a safeguard for its social legitimacy. It brings critical thinking, ethical sensitivity, and flexibility in complex contexts, ensuring that automation respects and enhances fundamental rights rather than undermining them.

Ensuring privacy through supervised anonymization

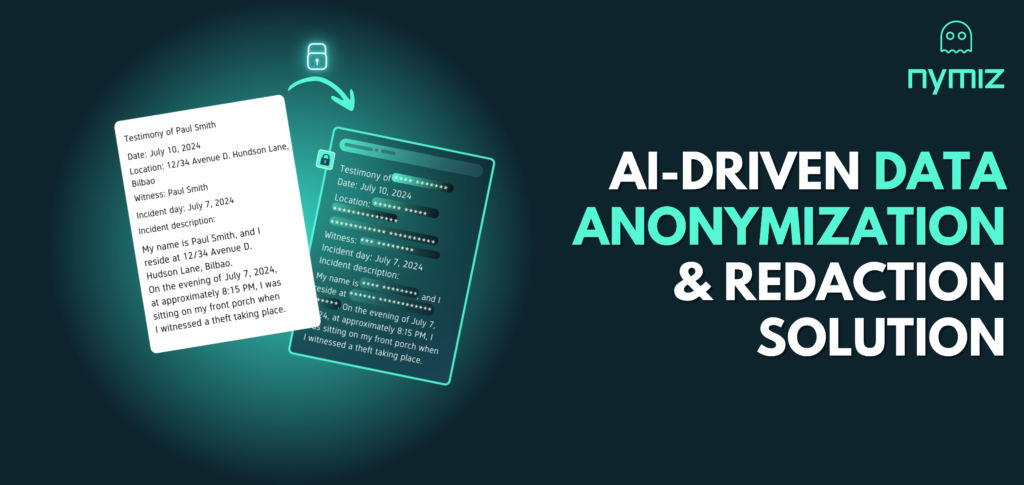

Anonymization is a highly effective method for safeguarding privacy in AI systems. But automation alone is insufficient; true privacy protection requires human‑supervised anonymization, bringing together algorithmic efficiency and professional contextual judgment.

Modern tools can streamline and accelerate anonymization far beyond traditional or manual methods. Nymiz leads in this area with a solution that anonymizes documents and datasets with up to 95% accuracy, while also offering a manual review mode so teams can inspect, adjust, and validate results before AI training or deployment. This blend of automation and human control ensures outcomes are precise, secure, and contextually appropriate.

The key benefits of this hybrid approach include:

- Preventing the leakage of personally identifiable information (PII): Automated anonymization tools can miss contextually sensitive elements only humans can identify. Manual review catches exceptions, errors, or ambiguous cases before they pose a risk.

- Context‑specific anonymization: Different domains require tailored anonymization. In legal projects, preserving document context may matter more than removing identity. In healthcare, sensitive clinical variables should be protected without losing analytical value. Human oversight enables this nuanced balance.

- Regulatory compliance: The GDPR and the EU AI Act both require technical and organizational measures that protect fundamental rights. Supervised anonymization not only meets these standards but also provides documented control steps, crucial for audits or impact assessments.
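The hybrid approach described above can be illustrated with a minimal Python sketch. It is not Nymiz's implementation or API: the two regular expressions, the `Finding` class, and the `example_reviewer` function are hypothetical names invented for this illustration, and the patterns are deliberately narrow. The point is only the shape of the workflow: automation proposes detections, a reviewer confirms them and adds what the patterns cannot see, and only then is the text redacted.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical, deliberately narrow patterns (assumption for this sketch);
# real PII detection needs far broader coverage than two regexes.
AUTO_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


@dataclass(frozen=True)
class Finding:
    label: str  # placeholder written in place of the sensitive span
    start: int
    end: int


def detect(text: str) -> List[Finding]:
    """Automated pass: propose spans that match a known pattern."""
    return [
        Finding(label, m.start(), m.end())
        for label, pattern in AUTO_PATTERNS.items()
        for m in pattern.finditer(text)
    ]


def flag_terms(text: str, terms: Dict[str, str]) -> List[Finding]:
    """Manual pass: spans a reviewer marks because context makes them identifying."""
    return [
        Finding(label, m.start(), m.end())
        for term, label in terms.items()
        for m in re.finditer(re.escape(term), text)
    ]


def redact(text: str, findings: List[Finding]) -> str:
    """Replace each span with its label, skipping spans that overlap an earlier one."""
    out, cursor = [], 0
    for f in sorted(findings, key=lambda f: f.start):
        if f.start < cursor:
            continue
        out.append(text[cursor:f.start])
        out.append(f"[{f.label}]")
        cursor = f.end
    out.append(text[cursor:])
    return "".join(out)


Reviewer = Callable[[str, List[Finding]], List[Finding]]


def supervised_anonymize(text: str, reviewer: Reviewer) -> str:
    """Nothing is redacted until the reviewer returns the final list of findings."""
    return redact(text, reviewer(text, detect(text)))


SAMPLE = "Contact Ana at ana@example.com or +34 600 123 456. She is the mayor's daughter."


def example_reviewer(text: str, proposed: List[Finding]) -> List[Finding]:
    # Stand-in for a person: keeps the automated findings and adds two
    # context-dependent identifiers the patterns have no way to recognise.
    manual = flag_terms(text, {"Ana": "NAME", "the mayor's daughter": "RELATION"})
    return proposed + manual


print(redact(SAMPLE, detect(SAMPLE)))                  # automation alone
print(supervised_anonymize(SAMPLE, example_reviewer))  # automation plus review
```

Comparing the two printed lines shows the gap the review step closes: the automated pass removes the email address and phone number but leaves the name and the family relationship, which are identifying only in context.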

Supervised anonymization narrows the gap between automation and accountability. It ensures data used in AI is processed responsibly, securely, and in line with the principles of privacy by design and by default. As a leader in data protection and privacy, Nymiz turns this vision into reality, offering tools that protect not only data, but also trust in the systems using it.

Compliance requirements: What the law says about human oversight in AI

As AI solidifies its role in sensitive sectors (healthcare, justice, employment, and finance), regulatory frameworks are evolving to reinforce the principle of human oversight. This supervision is not just an ethical best practice but a growing legal obligation in many jurisdictions.

In Europe, the EU AI Act adopts a risk‑based approach, classifying certain systems as high risk, including those used in hiring, medical diagnosis, law enforcement, or credit scoring. These systems must meet stringent requirements, including effective human intervention and oversight. That means not only giving a person the ability to review decisions, but also documenting comprehensively how and when oversight occurs.

The GDPR already establishes the right not to be subject to fully automated decisions with legal or similarly significant effects unless there is meaningful human involvement. This principle is reinforced by the AI Act, which also introduces proportionate sanctions for high-risk AI deployed without human controls.

In the U.S., agencies like the Federal Trade Commission (FTC) and the Equal Employment Opportunity Commission (EEOC) are stepping up algorithmic oversight. They require organizations to demonstrate fairness, transparency, and non‑discrimination, especially when civil rights are at stake. Lack of human review mechanisms can lead to fines, regulatory blocks, or lawsuits over unjust algorithmic practices.

Key compliance obligations around human oversight include:

- Keeping auditable records of human interventions in automated decisions

- Implementing human review or veto processes at critical decision points in the system

- Ensuring traceability and explainability in AI decisions, documenting how human oversight influenced outcomes

- Tailoring control mechanisms to each sector and risk level, applying proactive accountability and rights-centered design principles
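The first two obligations in the list above, auditable records and a human review or veto step, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a compliance template: the confidence threshold, the field names, and the `record_outcome` function are all hypothetical, and which decisions must be routed to a person depends on the applicable law and the risk level of the system.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List, Optional

REVIEW_THRESHOLD = 0.90  # hypothetical cut-off chosen for this sketch


@dataclass
class Decision:
    case_id: str
    model_output: str
    confidence: float


@dataclass
class AuditRecord:
    case_id: str
    model_output: str
    final_output: str
    action: str              # "auto_accepted", "approved", or "overridden"
    reviewer: Optional[str]  # None only when no review was required
    reason: str
    timestamp: str


def needs_review(decision: Decision) -> bool:
    """Route low-confidence outputs to a person before they take effect."""
    return decision.confidence < REVIEW_THRESHOLD


def record_outcome(decision: Decision, log: List[AuditRecord],
                   reviewer: Optional[str] = None,
                   override: Optional[str] = None,
                   reason: str = "") -> AuditRecord:
    """Finalise a decision and append an audit record describing who did what."""
    if needs_review(decision) and reviewer is None:
        # The veto point: the decision cannot be finalised without a named reviewer.
        raise ValueError(f"case {decision.case_id} requires human review")
    if reviewer is None:
        action, final = "auto_accepted", decision.model_output
    elif override is not None and override != decision.model_output:
        action, final = "overridden", override
    else:
        action, final = "approved", decision.model_output
    record = AuditRecord(decision.case_id, decision.model_output, final, action,
                         reviewer, reason, datetime.now(timezone.utc).isoformat())
    log.append(record)
    return record


def to_jsonl(log: List[AuditRecord]) -> str:
    """Serialise the log as one JSON object per line, easy to archive and audit."""
    return "\n".join(json.dumps(asdict(r)) for r in log)


audit_log: List[AuditRecord] = []
record_outcome(Decision("A-1", "approve", 0.97), audit_log)
record_outcome(Decision("A-2", "reject", 0.62), audit_log,
               reviewer="analyst_01", override="approve",
               reason="Income history was incomplete in the source data")
print(to_jsonl(audit_log))
```

Each record keeps the model's original output alongside the final one, so an auditor can see where human oversight changed the outcome and why.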

Integrating human oversight is not an isolated formal requirement; it’s a structural safeguard against systemic risks from poorly controlled automation. Meeting these demands not only avoids penalties but also builds trust and legitimacy in AI.

How Nymiz enhances human oversight in AI

In an environment where data protection is essential and regulatory demands are rising, Nymiz stands out as a critical solution for reinforcing human oversight in AI-driven processes. Its platform blends advanced automation with features crafted to preserve human control over sensitive data, enabling AI deployment that is both effective and compliant.

- Manually validated anonymization: Sophisticated automated anonymization is applied at scale, but always under expert supervision. Users can review, edit, and validate anonymized data via a clear, intuitive interface, ensuring no identifiable elements slip through while preserving utility.

- Customizable privacy controls: Every regulatory environment and data type demands different treatment. Nymiz offers fine-grained configuration based on sector (healthcare, legal, finance), data type (structured or unstructured), and applicable regulations (GDPR, HIPAA, AI Act). This ensures human oversight is embedded within a dynamic governance model.

By combining efficient automation with human validation, Nymiz enables organizations to reduce risk, ensure compliance, and build both internal and external trust. It’s not just about anonymizing data; it’s about doing it transparently, ethically, and with oversight, so AI works for people, not at the expense of their rights.

Conclusion: Human oversight isn’t optional, it’s strategic

Organizations today face escalating legal, reputational, and operational risks when rolling out AI without adequate human controls. Human oversight transforms abstract trust into tangible safeguards.

Solutions like Nymiz prove that automation and oversight are not opposed; they’re complementary pillars for building AI systems that are robust, ethical, and responsible.

Interested in aligning your AI with human values? Schedule a demo with Nymiz and begin integrating real oversight into your AI workflows.